My research is focused on image/shape analysis, machine learning, and computer vision. Content-based retrieval has been the central application of this focus since my PhD work and thereafter. I'm interested in both content description and statistical learning dimensions of the retrieval problem. I have several contributions particularly in the 3D object retrieval subdomain [ see publications ].

I'm attracted by vision problems where one can invoke machine learning and data mining methods. Content-based retrieval is one such application domain but I'm equally interested in related problems such as object categorization and visual scene understanding. I envision an ideal visual inference system (VIS) that would meet the following requirements:

- VIS admits a rich representation incorporating all the visual cues (shape, color, texture, motion) in its inference mechanisms,

- VIS contains generative models of these cues conditioned on object, scene, structure, and background categories,

- VIS is able to understand the general theme of a scene (outdoor/indoor, nature/urban, arts, sports, daily life,...) at the basic level, but it can also be flexibly driven to a specific task such as detecting and localizing a particular object category (faces, cars,...) when it's told to do so,

- VIS resorts to both generative and discriminative learning models in a selective way, i.e., it's able to choose the most useful model for a specific task, - this also applies to the type of the visual cue that should be used,

- VIS is able to tell how confident it is in its predictions.

There are certainly more items to add to this list, and it's very probable that such omnipotent systems will not become a reality in the near future. Nevertheless, I think that keeping such a holistic view can help us build better systems than the existing ones.

Here is a list of selected projects that I have been working on since 2002. Those marked * are funded projects, while the others are motivated by personal interests at the outset.

- Vispera Information Technologies Projects*

- Vistek ISRA Vision Projects* (2010-2013)

- CaReRa* (2011-current)

- IRonDB* (2009)

- DIRAC* (2008)

- 3D Object Retrieval* (2004-2007)

- Image Analysis and Segmentation (2004-2006)

- Exploratory Data Analysis for fNIRS Signals* (2002-2004)

- Exploratory Data Analysis Methods for Image Processing (2002-2004)

- Automated Fish Classification from Underwater Images

You may scroll down to read brief descriptions. Related publications can be found here.

Vispera Information Technologies Projects

Please visit Vispera for further information.

Vistek ISRA Vision Projects (2010-2013)

At Vistek ISRA Vision, I was in charge of several industrial and European-funded R&D projects. Please visit Vistek ISRA Vision for further details.

Human-Centric Computer Vision: Looking at People

Humans, as moving and reasoning entities, are the favorite subjects of other humans. People observe and recognize (the traits of) others, people try to understand what others do, people interact with others. People's most important asset in all these activities is their visual system. If computer vision is to mimic the human visual system, then it is no surprise that "looking at people" constitutes one of the most important computer vision challenges. In the "Looking at People" area of computer vision, research revolves around such topics as human detection and tracking, facial image analysis and visual profiling, change detection, human pose estimation, human activity monitoring and human behavior understanding, which I collectively call human-centric computer vision. Since early 2010, this branch of computer vision has been one of the main targets of my research activities at Vistek ISRA Vision in the context of several EU-funded projects.

An emerging niche offering great potential for computer vision-based human activity monitoring lies within the healthcare domain. The worldwide increase in average lifespan has been accompanied by an unprecedented upsurge in the occurrence of age-related mental disorders with high socio-economic costs. The concept of personal healthcare emerges as a promising paradigm for dealing with such problems in a meaningful and sustainable manner, enabling senior people to maintain independence and inclusion in society, while improving their quality of life and the effectiveness of their caregivers. Monitoring of daily activities, lifestyle, and behavior, in combination with medical data, provides clinicians with a comprehensive "image" of the condition and its progression without their being physically present, allowing remote care of their patients. In the construction of the clinician's "image" of the patient, computerized visual analysis of humans can naturally provide valuable parameters. At Vistek ISRA Vision, as senior researcher and R&D Director, I was involved in three concurrent projects in this novel line of multidisciplinary research: ViPSafe, Dem@Care, and JADE. ViPSafe is a relatively small-scale project, funded by TÜBITAK and German Research Council BMBF, focusing on low-level algorithm development in elderly people detection and tracking as well as emergency monitoring. Dem@Care is an EU-FP7 large-scale integrated project with the ambitious goal of developing a fully-fledged remote monitoring system leveraged by multiple sensors and closing the interaction loop for both the clinician and the patient. JADE is an EU Regions of Knowledge project funded by the FP7 Capacities action, aiming to encourage a strategic and integrated approach among healthcare decision makers and stakeholders to promote the paradigm of personalized healthcare systems.
I was the local coordinator of these projects and collaborated with significant players of the European research ecosystem such as Philips Research from The Netherlands, INRIA and Université de Bordeaux from France, IBM Israel, CERTH from Greece, and Karlsruhe Institute of Technology from Germany, to name a few.

Human-centric computer vision will enable applications also in the consumer domain. The concept of user-specific advertisement is now an established marketing approach especially in media and Internet businesses. In the ViCoMo Project, funded by ITEA 2 - a EUREKA cluster programme, we developed computer vision algorithms for gender classification, smile detection and age estimation from facial images and video frames, broadening the scope of user-specific advertisement to daily life, say to people wandering in shopping malls and markets. In the context of ViCoMo, teaming up with ViNotion - a TU/Eindhoven spin-off from The Netherlands, and VTT - one of the major research institutions in Finland, we successfully demonstrated this concept at the yearly ITEA symposia in 2010 and 2011. ViCoMo, in its full extent, is also concerned with such research issues as multi-camera video analysis and 3D environment modeling in order to leverage human-centric computer vision systems with contextual information. In ViCoMo as in other international projects, I collaborated with renowned research institutes and technology companies in Europe such as INRIA (France), TU/Eindhoven (The Netherlands), VTT (Finland), Thales (France), and Philips Research (The Netherlands).

Machine Vision-Based Industrial Inspection and Measurement

Industrial applications of machine vision differ from human-centric computer vision in that the vision system designer has much greater control on the environment, on the lighting conditions, on how the object is handled for imaging and on the process flow. This is advantageous as one can steer the solution by controlling the environment (and vice versa) to meet the performance requirements, which, on the other hand, are way more stringent and demanding than, say, in consumer domain or in visual information retrieval. A part validation task in factory automation might require in general 99% pattern recognition performance if not 100%, a measurement task might have to be performed with a precision easily on the order of microns, a surface inspection task might not be deemed successful with even 1% false alarm. Since the beginning of 2010, as vision solution architect and end-to-end system designer at Vistek ISRA Vision, I had the occasion of using and adapting a battery of geometry and appearance-based image and shape description tools on several industrial tasks.

The sheet metal inspection system that we built in the Renault factory in Bursa is able to detect surface defects in real time with an acceptable false alarm rate. An extension of the algorithms that I developed and implemented for this system can prospectively be used on textile surfaces having complex visual backgrounds (which actually pose a significant research challenge). The LPG tank surface inspection system that we built in the Aygaz refill facilities in Istanbul uses geometry-based processing and is able to detect several tank metal surface defects with minute precision. Another tough material for inspection tasks is glass. We developed, built and deployed three consumer glass quality inspection machines for Pasabahçe Sisecam targeting a list of 200 glass surface defects and several precision measurements in real time for line speeds reaching as high as 200 items per minute. For the Ford Otosan factory in Gölcük, we integrated an active sheet-of-light 3D depth imaging based pattern matching system for tire and rim traceability. Other instances of my industrial automation projects were a multi-camera, high-accuracy, high-precision distance measurement system for the Arçelik fridge factory in Eskisehir, and a color measurement system for the Yunsa factory in Cerkezkoy.

CaReRa* (2011-current)

CaReRa is a BU/EE VAVlab project on content-based case retrieval in radiology databases funded by TÜBITAK. It constitutes the most recent medical-related visual information retrieval project I am involved with. CaReRa is unique and innovative as it aims at going one step further than classical content-based image retrieval. A case in the medical world consists not only of radiological images but also of various other types of information. Accordingly, CaReRa takes it as a challenge to augment the description problem to cover non-image meta-data as well as radiological images. A concomitant research problem thus arises as how to measure the similarity between cases. I currently work on case description and case similarity aspects of the CaReRa project.

IRonDB* (2009)

IRonDB was a joint project between Philips Research Europe and Sabancı University (Istanbul) funded by EU 6th Framework Marie Curie Industry-Academia Partnership Programme. In this project, we dealt with MR-based brain image analysis, indexing, and retrieval for neurodegenerative diseases like Alzheimer’s. Our goal was to provide automated image analysis and decision support tools that would assist the medical practitioner in processing and reasoning with large brain MR datasets.

In IRonDB, on one hand, we dealt with the problem of detecting, localizing, and quantifying MR image structures that result from neurodegenerative abnormalities. What makes this problem really tough is the high inter/intra-subject variability exhibited by the MR scans. Despite this challenge, we think there exist some regularities in disease progression, which can be discovered by data mining methods.

On the other hand, we tried to find intelligent ways of integrating visual similarity information with non-visual meta-data in a user-interactive retrieval context. For instance, neuropsychiatric test (NPT) scores are widely used prior to diagnostic decisions and they prove to be very useful. As such, when augmented with adequate processing of visual information, statistical inference based on NPTs offers a promising direction to follow for the early diagnosis of mental disorders. Our CIVR'09 paper presents an interesting application of the well-known relevance feedback mechanism in a context where the user provides uncertain relevance judgments.

keywords: content-based retrieval, MRI, medical diagnosis, Alzheimer's disease, image-based knowledge discovery, uncertain user models, meta-data integration, neuropsychiatric tests

people: Ahmet Ekin (Philips Research), Aytül Erçil (Sabancı), Devrim Ünay (Sabancı), Octavian Soldea (Sabancı)

funding: EU 6th Framework Programme

3D Object Retrieval* (2004-2007)

The promise of the next generation search engines is to let the user formulate her queries not only as keywords but also in terms of images, video and/or shapes. During my PhD, I worked for this to become more of a reality than a dream in an emerging domain. Nowadays, 3D models arise from a multitude of application areas such as computer aided-design, cultural heritage archival, molecular modeling and video games industry. Within the decade, the need for intelligent content-based 3D model search is thus likely to become as pressing as it currently is for ordinary images and video.

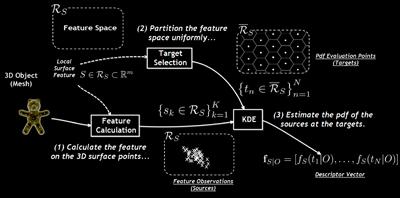

In this project, we first addressed the problem of finding a suitable shape description methodology for content-based retrieval of complete 3D models. Our probabilistic generative description scheme, the density-based framework (DBF), describes 3D models via multivariate pdfs of their local surface features.

Descriptors in this framework are relatively insensitive to small shape perturbations and mesh resolution, and they can be computed very efficiently thanks to the fast Gauss transform algorithm. Furthermore, with its permutation property, DBF allows invariant matching of 3D models for a certain class of transformations. DBF qualifies as one of the best 3D shape descriptors, as established by extensive retrieval experiments on several databases. Our PAMI paper provides an in-depth analysis of this framework.
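To make the idea concrete, here is a minimal sketch of a density-based descriptor, under several assumptions that are not taken from the actual DBF implementation: the local features are toy 2D vectors, the kernel is an isotropic Gaussian with a hand-picked bandwidth, and the density is evaluated by direct summation rather than by the fast Gauss transform. The function name `density_descriptor` and all parameter values are hypothetical.

```python
import numpy as np

def density_descriptor(features, targets, bandwidth):
    """Sample a Gaussian kernel density estimate of local features at fixed targets.

    features : (n, d) array, one local surface feature per row
    targets  : (m, d) array of evaluation points shared by all models
    bandwidth: scalar kernel width (assumed isotropic for simplicity)
    Returns an (m,) descriptor vector.
    """
    features = np.asarray(features, dtype=float)
    targets = np.asarray(targets, dtype=float)
    n, d = features.shape
    # Squared distances between every target and every feature: shape (m, n)
    diff = targets[:, None, :] - features[None, :, :]
    sq_dist = np.sum(diff ** 2, axis=-1)
    norm = (2.0 * np.pi * bandwidth ** 2) ** (d / 2.0)
    # Direct O(m*n) summation; a fast Gauss transform would approximate this sum
    return np.exp(-0.5 * sq_dist / bandwidth ** 2).sum(axis=1) / (n * norm)

rng = np.random.default_rng(0)
# Synthetic stand-ins for the local features of two different 3D models
model_a = rng.normal(loc=[1.0, 0.0], scale=0.2, size=(500, 2))
model_b = rng.normal(loc=[1.5, 0.3], scale=0.2, size=(500, 2))

# Regular grid of target points in the 2D feature space
g = np.linspace(-1.0, 3.0, 16)
targets = np.stack(np.meshgrid(g, g, indexing="ij"), axis=-1).reshape(-1, 2)

desc_a = density_descriptor(model_a, targets, bandwidth=0.2)
desc_b = density_descriptor(model_b, targets, bandwidth=0.2)

# Descriptors are plain vectors, so any vector distance can compare two models
l1_distance = np.abs(desc_a - desc_b).sum()
```

Because every model is sampled at the same target points, two descriptors are directly comparable, and small perturbations of the surface only shift the smoothed density slightly.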

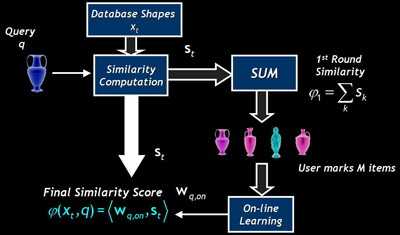

Content-based retrieval can benefit a lot from user interaction and machine learning. At later stages of the thesis project, we tackled the similarity learning problem in a user-interactive context, where typically, a user first provides her judgments (likes or dislikes) on a few initial items returned by the search engine. Based on this high-level information, the engine then returns a refined set of results. This is a setting known as relevance feedback, which has been lately abstracted as supervised classification with a small training set. In fact, the last decade has witnessed an extensive use of discriminative methods such as SVM or boosting in a variety of application areas.

In our approach, we also relied on the discriminative paradigm but with the ranking risk, which is a less studied criterion than classification error in the context of relevance feedback. For retrieval problems, this choice is not only more sound than using classification error but also enables us to write down an explicit linear similarity model, which we can optimize efficiently using off-the-shelf solvers.
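The following sketch illustrates the general idea of learning a linear similarity model by minimizing a ranking criterion. It is not the method from our papers: it assumes a pairwise hinge surrogate of the ranking risk, a plain subgradient descent loop instead of an off-the-shelf solver, and synthetic per-descriptor similarity scores. The function names and hyperparameters (`reg`, `lr`, `epochs`) are hypothetical.

```python
import numpy as np

def learn_similarity_weights(scores, labels, reg=0.1, lr=0.1, epochs=300):
    """Learn weights w so that relevant items score higher than non-relevant ones.

    scores: (n, K) array, similarity of each feedback item to the query
            under K different shape descriptors
    labels: (n,) array with +1 (relevant) or -1 (non-relevant)
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels)
    pos = scores[labels == 1]
    neg = scores[labels == -1]
    # One difference vector per (relevant, non-relevant) pair
    pair_diffs = (pos[:, None, :] - neg[None, :, :]).reshape(-1, scores.shape[1])
    w = np.zeros(scores.shape[1])
    for _ in range(epochs):
        violated = pair_diffs @ w < 1.0
        # Subgradient of the regularized, pair-averaged hinge loss
        grad = reg * w - pair_diffs[violated].sum(axis=0) / len(pair_diffs)
        w -= lr * grad
    return w

def ranking_risk(w, scores, labels):
    """Fraction of (relevant, non-relevant) pairs ranked in the wrong order."""
    s = np.asarray(scores, dtype=float) @ w
    labels = np.asarray(labels)
    pos, neg = s[labels == 1], s[labels == -1]
    return float(np.mean(pos[:, None] <= neg[None, :]))

rng = np.random.default_rng(0)
labels = np.array([1] * 6 + [-1] * 6)            # user judgments on 12 returned items
scores = rng.uniform(0.0, 1.0, size=(12, 3))     # three descriptors, mostly noise
scores[:, 0] += 1.5 * (labels == 1)              # only descriptor 0 is informative here

w = learn_similarity_weights(scores, labels)
risk = ranking_risk(w, scores, labels)
```

The learned `w` defines the refined similarity `scores @ w`, which can then be used to re-rank the whole database for the next feedback round.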

Our Eurographics'08 paper presents early results of this approach. Later on, we improved upon the linear model by modeling its component similarities in terms of relevance posteriors corresponding to different shape descriptors. The posterior parameters are learned off-line using a representative set of training items. For 3D object retrieval, this joint use of on-line and off-line learning methods not only improved the performance significantly as compared to the totally unsupervised case, but also outperformed benchmark methods such as SVMs. A detailed version of this work that includes posterior relevance modeling is scheduled to appear in the IJCV Special Issue on 3D Object Retrieval in 2010.

keywords: content-based retrieval, 3D models, shape description, invariant shape matching, multivariate density estimation, 3D surface geometry, similarity learning, statistical ranking, relevance feedback, 3D shape ontologies and ontology-driven search

people: Bülent Sankur (thesis supervisor, Bogazici EE/BUSIM), Francis Schmitt (thesis supervisor, Télécom ParisTech/TSI), Yücel Yemez (thesis co-supervisor, Koç CMPE), Helin Dutagaci (NIST)

funding: CNRS, TUBITAK, CROUS

Exploratory Data Analysis for fNIRS Signals* (2002-2004)

The analysis of functional near infrared spectroscopy (fNIRS) signals is the core topic of my MS thesis. This was a very exciting project, standing at the crossroads of statistical signal processing, data mining and computational neuroscience. My MS thesis has been one of the first academic contributions that hopefully added to the understanding of fNIRS signals [ see publications ].

Functional NIRS is an emerging non-invasive brain imaging modality. It’s a much less expensive technology than fMRI and/or PET, and reported as less discomforting by monitored patients. Although it cannot currently provide the spatial resolution of other technologies, fNIRS can be effectively used to monitor the hemodynamic changes in the human brain during functional cognitive activity. As such, fNIRS data analysis can complement fMRI in the challenging task of uncovering the mysteries of the human brain.

In this project, we developed a collection of data analysis tools to understand fNIRS signals acquired during functional activity of the human brain (e.g., measured during an oddball experiment). We carried out extensive hypothesis tests to statistically characterize fNIRS short-time signals, which we later described in the time-frequency plane. We partitioned the signal spectrum into several dissimilar subbands using a hierarchical clustering procedure. The discovered subbands have been observed to correspond to different types of activities of the human brain and/or other physiological changes. Furthermore, the proposed subband partitioning scheme is generic in that it can be applied to other types of biomedical signals. For a detailed description of this work, see our 2005 paper in the Journal of Computational Neuroscience.

At a second stage, we extensively used exploratory data analysis tools, such as independent component analysis and waveform clustering, to discover generic cognitive activity-related signal patterns. These are the counterparts of the brain hemodynamic response in fMRI. Evidence shows that cognitive activity measured by fNIRS reveals itself in a way very similar to the one measured by fMRI. It seems that fNIRS is likely to play a bigger role in explaining cognitive activity of the human brain in the near future. This work appeared in Medical & Biological Engineering & Computing in 2006.

keywords: computational neuroscience, functional data mining and description, independent component analysis, hierarchical clustering, B-spline processing, time-frequency analysis, functional near infrared spectroscopy, cognitive tasks

people: Bülent Sankur (thesis supervisor, Bogazici EE/BUSIM), Ata Akın (thesis co-supervisor, Bogazici BME/Biophotonics)

funding: TUBITAK

DIRAC* (2008)

DIRAC (Diagnostic Radiological Consultation Platform) is a joint initiative of Bogazici University Volumetric Visualization Group (VAVlab) and Stanford University 3D Radiology Lab. The platform is envisioned to aid the radiologists during their diagnostic reading of radiological images, in much the same way a radiologist would get help from a colleague.

DIRAC would be a relational database with semantic understanding and effective indexing of medical 2D/3D images based on their similarity. As a complete consultation system, it will also incorporate other forms of information and learn semantic relations. Along with a rich and varied set of descriptors and associated indexing schemes, the platform will include an interface that allows the users to submit new data and queries.

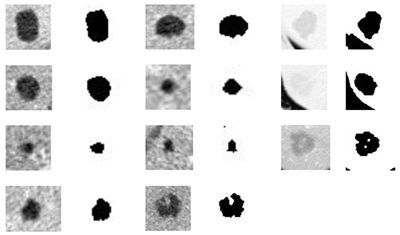

During my short term involvement in the DIRAC project, I prepared an extensive survey of medical retrieval systems and prototyped a segmentation module for liver lesions based on Markov Random Fields.

keywords: diagnostic radiology, case-based search in medical databases, medical image segmentation

people: Burak Acar (Bogazici EE/VAVlab), Daniel Rubin (Stanford/Radiology), Christopher Beaulieu (Stanford/Radiology), Sandy Napel (Stanford/Radiology)

funding: TUBA-GEBIP

Image Analysis and Segmentation (2004-2006)

One of the nice things about my PhD years was the self-driven projects that I pursued with my PhD fellows. These works addressed fundamental image analysis problems with advanced techniques and led to several international conference papers [ see publications ].

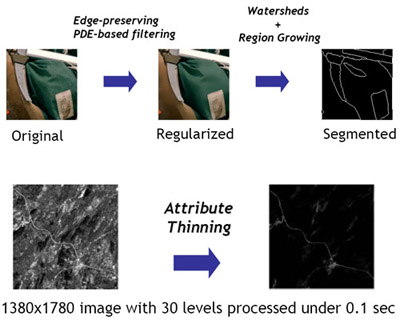

With Erdem Yoruk (JHU), we tackled the problem of color image segmentation by PDE-based regularization and mathematical morphology. We proposed an effective solution for the over-segmentation problem inherent to the watershed algorithm by preprocessing images with PDE-based edge-preserving filters. Operationally speaking, via a joint treatment of the three color channels, smoothing is forced to stop at edges and it effectively suppresses irrelevant color gradients in perceptually homogeneous areas. This greatly reduces the number of catchment basins that the watershed flooding would otherwise label as image segments. Any additional spurious segment can then be eliminated by basic region merging.
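As an illustration of the preprocessing idea only, the sketch below uses a Perona-Malik-style diffusion in which a single conductance, computed from the color difference summed over all channels, drives the smoothing of every channel. This is an assumed stand-in and not necessarily the PDE used in our papers; the watershed step itself is omitted. The function name and the settings `kappa`, `step` and `n_iter` are hypothetical.

```python
import numpy as np

def joint_edge_preserving_smoothing(image, n_iter=20, kappa=0.1, step=0.2):
    """Diffuse a color image with one conductance shared by all channels.

    image: (H, W, C) float array
    kappa: edge threshold; color differences much larger than kappa block diffusion
    step : time step; values up to 0.25 keep this explicit 4-neighbor scheme stable
    """
    u = np.asarray(image, dtype=float).copy()
    for _ in range(n_iter):
        # Differences to the four neighbors, with replicated borders
        padded = np.pad(u, ((1, 1), (1, 1), (0, 0)), mode="edge")
        diffs = [
            padded[:-2, 1:-1] - u,   # north
            padded[2:, 1:-1] - u,    # south
            padded[1:-1, :-2] - u,   # west
            padded[1:-1, 2:] - u,    # east
        ]
        update = np.zeros_like(u)
        for d in diffs:
            # Joint squared difference over channels: an edge in any channel
            # lowers the conductance for all of them
            joint_sq = np.sum(d ** 2, axis=2, keepdims=True)
            update += np.exp(-joint_sq / kappa ** 2) * d
        u += step * update
    return u

rng = np.random.default_rng(0)
# Toy image: two flat color regions separated by a vertical edge, plus noise
clean = np.empty((32, 32, 3))
clean[:, :16] = [0.2, 0.4, 0.6]
clean[:, 16:] = [0.8, 0.3, 0.1]
noisy = clean + rng.normal(0.0, 0.02, size=clean.shape)

smoothed = joint_edge_preserving_smoothing(noisy)
```

After such filtering, the gradient magnitude inside each region is much lower while the boundary stays sharp, which is what limits the number of catchment basins seen by a subsequent watershed.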

With Jérome Darbon (UCLA) and David Lesage (Télécom ParisTech), we developed fast algorithms for attribute-based morphological processing of grayscale images. Such operations (openings/closings, thinnings/thickenings) have the nice property of being connected in the sense that they do not move the contours of the original image. These methods can be efficiently used to simplify an image and/or enhance its contours. As such, they can serve as preprocessing tools for segmentation. They can also be applied for elongated structure detection and quantification.

keywords: morphological image processing, PDE-based image regularization

people: Jerome Darbon (UCLA Math), David Lesage (Télécom ParisTech/TSI), Erdem Yörük (JHU Applied Math)

Exploratory Data Analysis Methods for Image Processing (2002-2004)

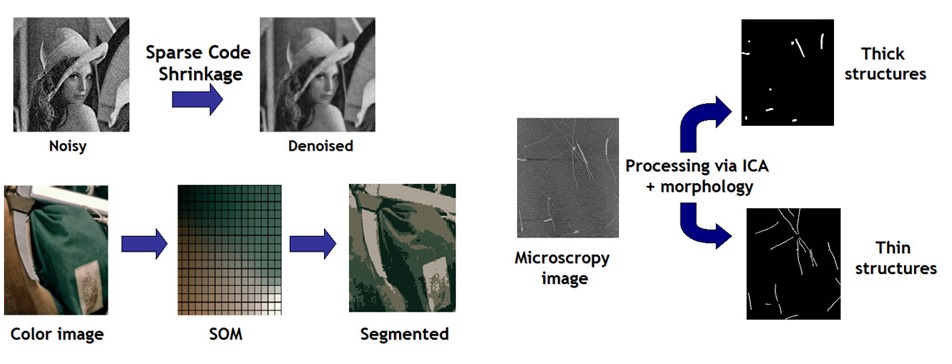

During my MS project, I gained extensive experience with exploratory data analysis tools such as independent component analysis (ICA), self-organizing maps (SOM), and clustering, which I applied to several image-related problems such as denoising, segmentation, and structure detection.

keywords: color image segmentation, image denoising, microscopic structure detection, independent component analysis, self-organizing maps

Automated Fish Classification from Underwater Images

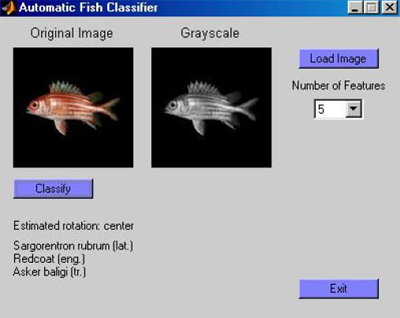

In this small project, I implemented a basic recognition system that can discriminate between 10 different Mediterranean fish species in a database of 100 images. Fish can have arbitrary in-plane rotations, but background segmentation is assumed. The correct classification rate of the system was around 80% using a minimum distance classifier with PCA-based image features. There is certainly a lot of room for improvement using more sophisticated texture, color, and shape features. I had a lot of fun during the development and I will certainly go back to try new things. After all, I'm sure anyone can think of many potential real-world applications of this. For instance, wouldn't it be nice to have an underwater camera that could tell us the identity of the fish we shoot... by optics of course?
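For readers unfamiliar with the technique, here is a minimal sketch of a minimum distance classifier on PCA features. It runs on synthetic 64-dimensional vectors with three classes, not on the fish database, and the helper names (`fit_pca`, `project`, `fit_class_means`, `predict`) as well as the choice of two components are assumptions made for illustration.

```python
import numpy as np

def fit_pca(X, n_components):
    """Return the data mean and the leading principal directions (as rows)."""
    mean = X.mean(axis=0)
    # Rows of vt are principal directions sorted by decreasing variance
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, vt[:n_components]

def project(X, mean, components):
    """Map samples to their PCA feature vectors."""
    return (X - mean) @ components.T

def fit_class_means(Z, y):
    """Compute one mean feature vector per class."""
    classes = np.unique(y)
    return classes, np.stack([Z[y == c].mean(axis=0) for c in classes])

def predict(Z, classes, means):
    """Assign each sample to the class with the nearest mean (Euclidean distance)."""
    dists = np.linalg.norm(Z[:, None, :] - means[None, :, :], axis=2)
    return classes[np.argmin(dists, axis=1)]

rng = np.random.default_rng(0)
prototypes = rng.normal(size=(3, 64))            # one synthetic "image" per class
y_train = np.repeat(np.arange(3), 20)
y_test = np.repeat(np.arange(3), 10)
X_train = prototypes[y_train] + 0.3 * rng.normal(size=(60, 64))
X_test = prototypes[y_test] + 0.3 * rng.normal(size=(30, 64))

mean, components = fit_pca(X_train, n_components=2)
classes, class_means = fit_class_means(project(X_train, mean, components), y_train)
y_pred = predict(project(X_test, mean, components), classes, class_means)
accuracy = float(np.mean(y_pred == y_test))
```

On real images the feature vectors would come from segmented, rotation-normalized fish regions, and the number of retained components would need to be tuned.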

keywords: object recognition and classification, PCA-based image features